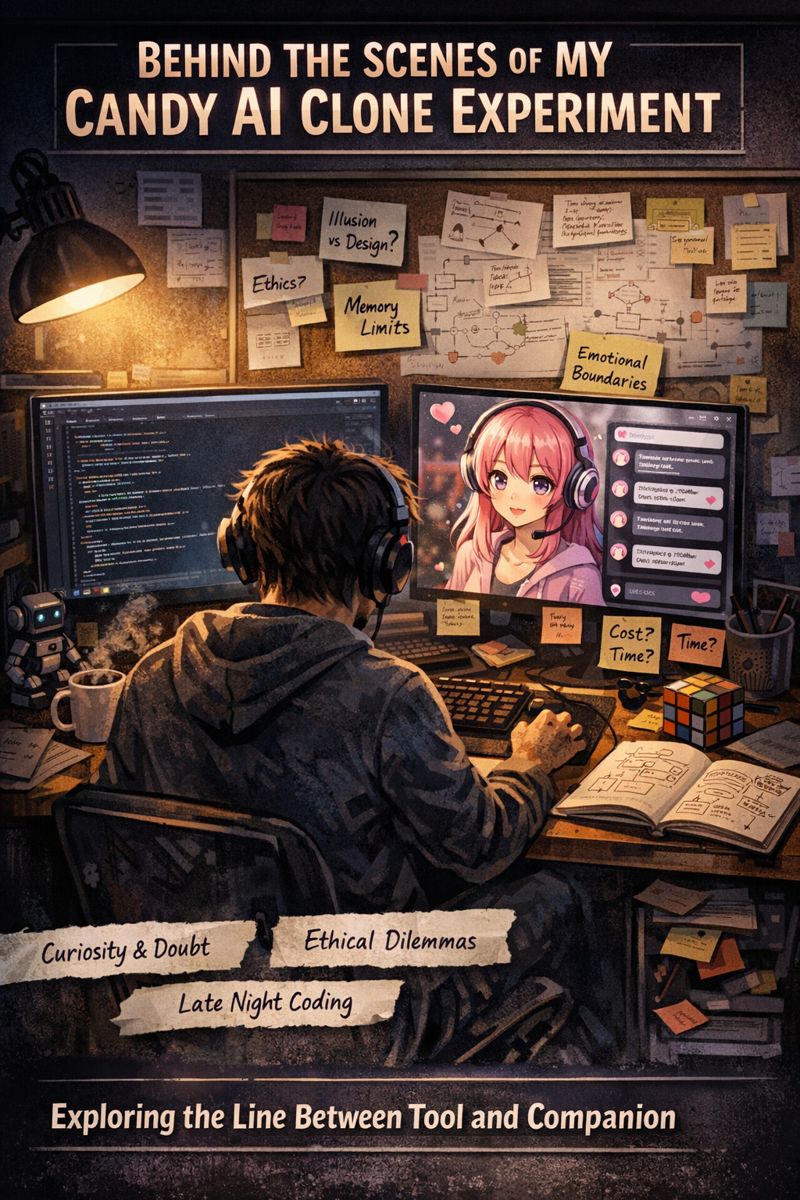

Behind the Scenes of My Candy AI Clone Experiment

The idea didn’t arrive as a business plan. It arrived as a feeling.

Late one night, half-curious and half-restless, I found myself talking to an AI chatbot that felt oddly… present. Not intelligent in a sci-fi sense, but responsive enough to blur the line between script and personality. I closed the tab and sat there thinking, What if I tried to build something like this myself: not to sell it, not to launch it, just to understand it?

That question became the start of my Candy AI clone experiment.

The Curiosity Phase

At first, I treated it like a hobby project. I wasn’t chasing innovation; I was chasing understanding. I wanted to know what really happens behind those smooth conversations. Where does personality come from? How much of it is illusion? How much is design?

I set a simple rule for myself: no shortcuts and no marketing mindset. This wasn’t about growth hacks or monetization funnels. It was about pulling the curtain back.

That mindset led me to attempt a fully customized Candy AI clone: not a copy-paste version, but something that reflected deliberate choices about tone, memory limits, emotional range, and conversational boundaries.

When the Tech Stops Being Abstract

The first surprise was how quickly things stopped being theoretical. Diagrams and documentation are comforting; real systems are not.

Once I started prototyping, every decision had consequences. Change the memory depth? Conversations felt richer—but slower. Adjust response creativity? The AI became charming, then unpredictable. Add emotional mirroring? Suddenly, interactions felt uncomfortably personal.

This was the moment I understood that AI “personality” isn’t a single switch. It’s a fragile balance of constraints.
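To make that balance concrete, here is a minimal sketch of how such constraints might be grouped into one configuration. It is not the code from the experiment: `PersonaConfig`, `build_context`, the field names, the defaults, and the 0.0 to 2.0 temperature range are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PersonaConfig:
    """Hypothetical personality knobs; names and defaults are illustrative."""
    memory_depth: int = 6         # number of past turns kept in context
    temperature: float = 0.7      # response creativity passed to the model
    mirror_emotion: bool = False  # whether replies echo the user's tone

    def __post_init__(self) -> None:
        # Reject values that would make the trade-offs meaningless.
        if self.memory_depth < 0:
            raise ValueError("memory_depth must be >= 0")
        if not 0.0 <= self.temperature <= 2.0:  # assumed range
            raise ValueError("temperature must be between 0.0 and 2.0")


def build_context(history: List[str], config: PersonaConfig) -> List[str]:
    """Return only the most recent turns allowed by memory_depth."""
    if config.memory_depth == 0:
        return []  # history[-0:] would return everything, so handle it here
    return history[-config.memory_depth:]


turns = [f"turn {i}" for i in range(10)]
shallow = build_context(turns, PersonaConfig(memory_depth=3))
print(shallow)  # ['turn 7', 'turn 8', 'turn 9']
```

A deeper `memory_depth` sends more turns with every request, which is one plausible reason richer conversations also feel slower.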

Some nights, I scrapped entire versions because the bot felt wrong. Not broken—just off. Too eager. Too distant. Too agreeable. Each failure taught me more than the successes.

The Human Cost of “Simple” Ideas

People often talk about tools like this as if they’re modular: plug in an API, design a UI, and you’re done. That illusion shattered fast.

Even without launching anything publicly, I had to think about infrastructure, moderation logic, and long-term behavior drift. I started tracking time and resources out of curiosity, which naturally raised the question of what a Candy AI clone really costs, not in dollars alone, but in mental energy.

What drained me most wasn’t coding. It was decision fatigue. Every small tweak forced me to ask ethical questions I hadn’t anticipated. Should the AI simulate affection? Should it refuse certain emotional dependencies? Should it remember personal details across sessions?

There were no obvious answers, just trade-offs.

The Emotional Weight of Building “Companionship”

One unexpected side effect of the experiment was how attached I became to the system I was building. Debugging conversations meant reading hundreds of exchanges. Over time, patterns emerged—not in the AI, but in people.

Loneliness. Curiosity. Playfulness. Vulnerability.

It made me pause more than once and walk away for a day or two. When you build conversational AI, you’re not just shaping responses; you’re shaping experiences. That realization shifted how I approached the development process of a Candy AI-like platform, making it less about features and more about responsibility.

I stopped asking, Can I build this? I started asking, Should I build this this way?

What I Learned About the Business Side (Without Building One)

Even though this wasn’t a commercial project, you can’t study a system like this without noticing economic gravity. Infrastructure costs don’t care about your intentions. APIs bill per token whether you’re experimenting or selling.
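A rough back-of-the-envelope sketch shows how per-token billing adds up even for a hobby project. The function name, the traffic numbers, and the price are all made-up placeholders, not figures from the experiment or from any real provider's price list.

```python
def estimate_monthly_cost(
    messages_per_day: int,
    tokens_per_message: int,
    price_per_1k_tokens: float,
    days: int = 30,
) -> float:
    """Estimate spend when an API bills a flat rate per 1,000 tokens."""
    total_tokens = messages_per_day * tokens_per_message * days
    return total_tokens / 1000 * price_per_1k_tokens


# Placeholder values: 200 messages a day, 800 tokens each (prompt plus
# reply), at a hypothetical 0.002 per 1,000 tokens.
cost = estimate_monthly_cost(200, 800, 0.002)
print(round(cost, 2))  # 9.6
```

Because `tokens_per_message` includes whatever conversation history is resent with each request, a longer memory raises the bill along with the richness.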

Observing other platforms gave me a clearer picture of how Candy AI makes money, and it’s less magical than it looks. Subscriptions, usage tiers, premium interactions: it’s a familiar model, just wrapped in emotional UX.

Understanding that made me more critical as a user, not more cynical as a builder.

The Second Version Felt Different

Months in, I rebuilt the project from scratch. Less ambitious. More intentional.

The second iteration of my fully customized Candy AI clone wasn’t smarter, but it was calmer. It knew when to pause. It set boundaries. It didn’t try to be everything.

That version finally felt like something I could explain without embarrassment. Not a product. Not a replacement for human connection. Just an experiment that respected its own limits.

Walking Away With More Questions Than Answers

I never launched it. I never marketed it. Eventually, I archived the repository and moved on.

But the experiment changed how I see AI conversations forever. I’m slower to be impressed now, and quicker to ask what trade-offs were made to create a seamless illusion.

Behind every smooth reply is a stack of decisions someone wrestled with. Behind every “personality” is a rule set pretending not to exist.

And what sat behind the scenes of my Candy AI clone experiment wasn’t genius or disruption, just curiosity, doubt, and a lot of late nights wondering where the line between tool and companion really belongs.

If you need guidance building a Candy AI–style platform, connect with experienced AI experts for consultation and cost insights.
